
Welcome to part six of Learning AI if You Suck at Math. If you missed part 1, part 2, part 3, part 4, and part 5, be sure to check them out.

If you’ve followed parts of this series you know that you really don’t need a lot of math to get started with AI. You can dive right in with practical tutorials and books on the subject.

However, there are lots of reasons to learn mathematical notation.

Maybe you just want to stretch yourself and learn a new skill? Learning something outside of your comfort zone is a fantastic way to keep your mind sharp.

Or perhaps you’d like to start reading a few of the papers on arXiv? You might even want to implement an exciting new research paper idea instead of waiting for someone else to put it on GitHub.

To do that you’ll need to know how to read those funny little symbols.

Maybe the greatest reason to learn math notation is that it lets you express complex ideas in a very compact way.

Without it, it would take pages and pages to explain every equation.

Yet even with all the resources out there it can still be intimidating to face a string of those alien characters.

Have no fear. I’m here to help.

I’ll show you that learning these symbols is not as hard as you think. But there are a few things holding you back.

First, if you’re like me, you hated math as a kid. I’ve discovered the key reason is that my teachers never bothered to answer the most important question:

Why?

Why am I doing this? How does this apply to my life?

They just slapped a bunch of equations on the board and told me to memorize them. That didn’t work for me and I’m betting it doesn’t work for you.

The good news is that if you’re interested in the exciting field of AI, you have a great answer to that question!

Now you have a reason to learn and apply it to real world problems. The “why” is because you want to write a better image recognition program or an interface that understands natural language! Maybe you even want to write your own algorithms some day?

The second thing holding you back is the plethora of horrible explanations out there. The fact is most people are not very good at explaining things. Most of the time people define math terms with more math terms. This creates a kind of infinite loop of misunderstanding. It’s like defining the word “elephant” by saying “an elephant is like an elephant.” Great. Now I understand. Not!

I’ll help you get to firmer ground by relating it to the real world and using analogies to things that you already know.

I won’t be able to cover all the symbols you need in one article, so you’ll want to pick up this super compact guide to math symbols, Mathematical Notation: A Guide for Engineers and Scientists by Edward R. Scheinerman, if you haven’t already. (It was a late addition to my first Learning AI if you Suck at Math article, but it’s become one of my most frequently used books. It’s filled with highlights and dog-eared pages. As my knowledge of various math disciplines expands I find myself going back to that book again and again.)

Let’s get rolling.

To start with, what is an algorithm?

It’s really nothing more than a series of steps to solve a particular problem. You use algorithms all the time whether you know it or not.

If you need to pack lunch for the kids, drop them off at school and get your dry cleaning before heading to work you’ve outlined a series of steps unconsciously to get from the kitchen to the office. That’s an algorithm. If your boss gives you six assignments that are competing for your time, you have to figure out the best way to finish them before the end of the day by choosing which ones to do first, second, in parallel, etc. That’s an algorithm.

Why is that important? Because an equation is just a series of steps to solve a problem too.

Let’s start with some easy symbols and build up to some equations.

Math is about transforming things. We have an input and an output. We plug some things into the variables in our equation, iterate through the steps and get an output. Computers are the same way. Now, most of the magic behind neural nets comes from three branches of math:

- Linear Algebra

- Set theory

- Calculus

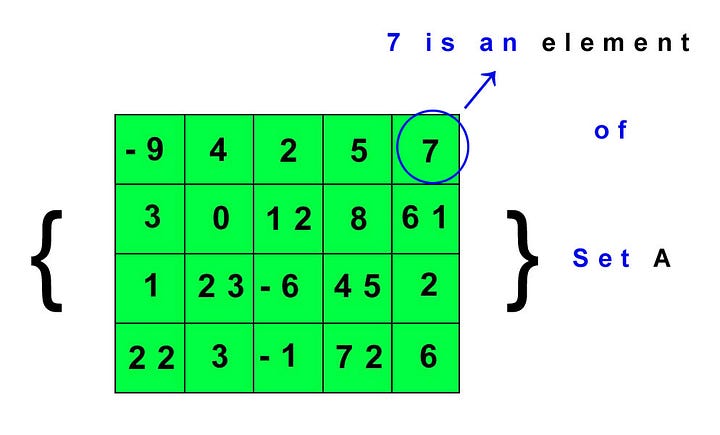

What’s a set? It’s a collection of things, usually enclosed by curly brackets {} or square brackets. (Math peeps don’t always agree on the best symbols for everything):

A Set

Remember that we looked at tensors in part 4? That’s a set.

A set is usually indicated by a capital letter variable such as A or B or V or W. The letter itself doesn’t much matter as long as you’re consistent.

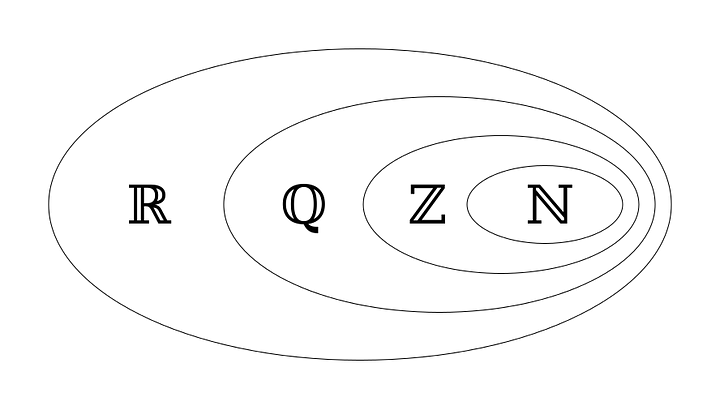

However there are certain capital letters and symbols reserved for important, well known sets of numbers, such as:

∅ = An empty set (a set with nothing in it yet). By the way, that symbol looks a lot like the Greek letter “phi” (φ), but it’s actually based on the Scandinavian letter Ø. Greek letters are used frequently in math. You can see their upper and lowercase versions here.

R = All real numbers (Real numbers are pretty much every number that exists, including integers, fractions, and transcendental numbers like Pi (π) (3.14159265…), but not including imaginary numbers (made up numbers to solve impossible equations) or infinity.)

Z = All the integers (whole numbers without fractions: -2, -1, 0, 1, 2, 3, etc.)

You can see a list of all the major reserved letters at the Math is Fun website.

All of these are sets and some of them are subsets, meaning they are wholly contained within the larger set like so:

Go ahead and look up what Q and N mean!

In this case we would say that Z (integers) is a subset of R (real numbers).

We could write that as such:

- A is a subset of (included in) B: A ⊆ B

- Conversely, B is a superset of (or includes) A: B ⊇ A
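If it helps to see the subset idea in code, here is a minimal sketch using Python's built-in set type. The sets A and B and their contents are made up for illustration.

```python
# Two small, made-up sets for illustration
A = {1, 2, 3}
B = {1, 2, 3, 4, 5}

# A is a subset of B: every element of A is also in B
a_in_b = A.issubset(B)      # same as A <= B

# B is a superset of A: B contains everything in A
b_has_a = B.issuperset(A)   # same as B >= A

print(a_in_b, b_has_a)  # True True
```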

Now why would I care if set B contains all of set A? Good question.

Imagine that one set contains all the people who live in the United States, along with their age, address, etc. Now imagine that the other set contains people who have higher incidences of heart disease. The overlap of the two sets could tell me what areas of the country have more problems with heart disease.
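The “overlap” of two sets is called their intersection. Here's a rough sketch with Python sets. The variable names and the people in them are invented for illustration, and a real dataset would carry far more detail than a name.

```python
# Hypothetical data: names standing in for full records
us_residents = {"Ana", "Bo", "Cy", "Di", "Ed"}
heart_disease = {"Bo", "Di", "Fay"}

# The intersection keeps only the people who appear in both sets
overlap = us_residents & heart_disease  # same as us_residents.intersection(heart_disease)

print(sorted(overlap))  # ['Bo', 'Di']
```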

Each set has elements inside of it. What’s an element? Just a part of the bigger set. Let’s take a look at our tensor again.

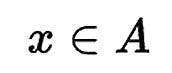

We denote elements of a set with a small italics variable, such as x. We use the weird looking E like symbol, ∈ (though not an E), to denote that an element is a part of a set. We could write that as such: x ∈ A

That means x is an element of set A.

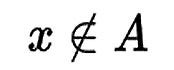

We could also say x is not an element of A: x ∉ A

The better you get at reading these symbols the more you can intelligently “talk through” the string of characters in your mind. When you see the above now you can say “x is not an element of set A”. The better you can articulate what you’re reading the closer you are to understanding it.
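Programmers can “talk through” the same idea in Python, where the keywords in and not in play the role of the element-of symbols. This is only a small sketch; the set and the values tested are made up.

```python
A = {1, 2, 3, 4, 5}  # made-up set for illustration
x = 3
y = 9

x_in_A = x in A          # "x is an element of A"
y_not_in_A = y not in A  # "y is not an element of A"

print(x_in_A, y_not_in_A)  # True True
```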

Now, of course it’s impractical to write out all the elements of a set, so we might write out all the elements in a series in a special way. So let’s say we had a series of numbers increasing by one each time. We would write that as:

x = {1, 2, 3, 4, …, n}

The dots just mean that the series continues until n, where n is a stand in variable for the “end of the series.” So if n = 10, the set contains the range of numbers from 1 to 10. If n = 100, that is the range of numbers from 1 to 100.
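One way to picture that stand-in variable n is as a function argument. The sketch below builds the series for any n; the function name first_n is just an illustrative choice.

```python
def first_n(n):
    """Return the set {1, 2, 3, ..., n} (function name is illustrative)."""
    # range stops one short of its end value, hence n + 1
    return set(range(1, n + 1))

print(len(first_n(10)))   # 10 elements: the numbers 1 through 10
print(max(first_n(100)))  # the series ends at n, here 100
```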

Equation Crazy

Sets are interesting when we transform them with linear algebra. You already know most of the major algebra symbols like + for addition and − for subtraction.

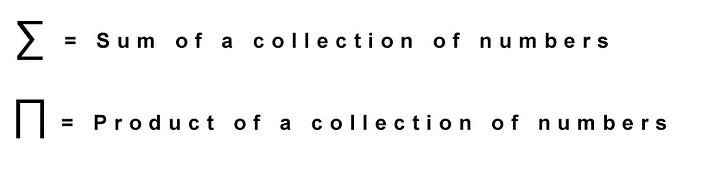

Let’s look at two new symbols and an equation. First the symbols: Σ (capital sigma) stands for sum, and Π (capital pi) stands for product.

What is a sum? It’s the addition of all the numbers in a series. Let’s say we had a vector set A (remember that a vector is a single row or column of numbers) that contains: {1,2,3,4,5}.

The sum of that series would be:

1 + 2 + 3 + 4 + 5 = 15

The product is multiplication of all the numbers. So if we take the same set A we get:

1 × 2 × 3 × 4 × 5 = 120
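Both operations are one-liners in Python, which makes for a quick sanity check of the arithmetic above. This sketch stores A as a list and assumes Python 3.8 or newer, since that is when math.prod was added.

```python
import math

A = [1, 2, 3, 4, 5]

total = sum(A)          # 1 + 2 + 3 + 4 + 5
product = math.prod(A)  # 1 * 2 * 3 * 4 * 5 (math.prod needs Python 3.8+)

print(total, product)   # 15 120
```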

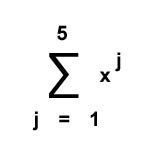

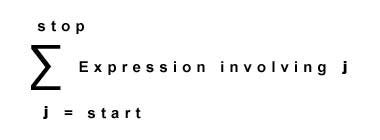

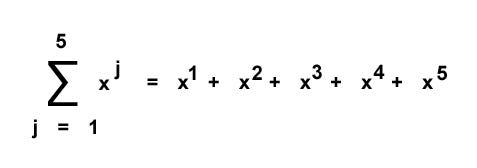

We can represent a sum equation on a series of numbers compactly like this:

So how do we read that? Simple. Check this out:

We start at the bottom with j, which is a variable. We move to the right and plug j into our expression. Lastly, we know the series stops at the number given on the top. Let’s see an example.
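As a worked example, assume the expression is x raised to the power of j, with x = 2 and the series running from j = 1 up to n = 5. Those are the same values the Python function in this section uses, so treat this as a sketch of that case rather than the only possible reading.

```latex
\sum_{j=1}^{n} x^{j} = x^{1} + x^{2} + \cdots + x^{n}
\qquad\text{so with } x = 2,\ n = 5:\qquad
\sum_{j=1}^{5} 2^{j} = 2 + 4 + 8 + 16 + 32 = 62
```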

If you’re a programmer, you might recognize this is a for loop!

Let’s create a little function for this equation in Python:

    def sum_powers(x):  # illustrative name; takes the base x
        j = 1
        output = []  # creates an empty list
        for k in range(0, 5):  # starts a for loop that runs 5 times
            z = x**j  # raises x to the power of j
            j = j + 1  # raises j by 1 until it hits n, which is 5
            output.append(z)  # appends the result to the list
        return sum(output)  # sums all the numbers in the list

    print(sum_powers(2))  # prints 62

Forgive my hideous Python folks, but I’m going for clear, not compact.

The ** symbol means to raise to the power of j. The function takes the variable x, which I supplied as 2. It then loops five times (k runs from 0 to 4), raising x to the powers 1, 2, 3, 4 and 5 and appending those numbers to a list. It then runs a sum on that list to get the answer: 62.

Enter the Matrix

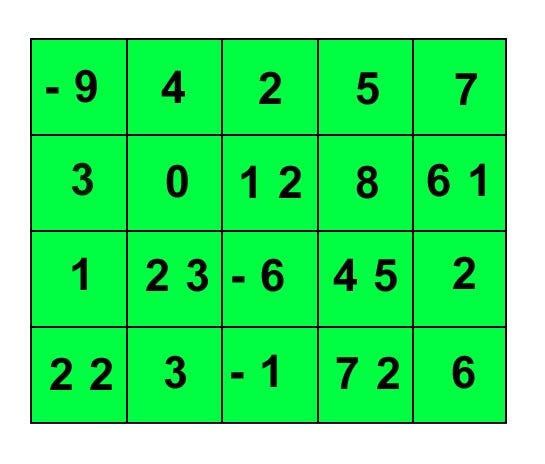

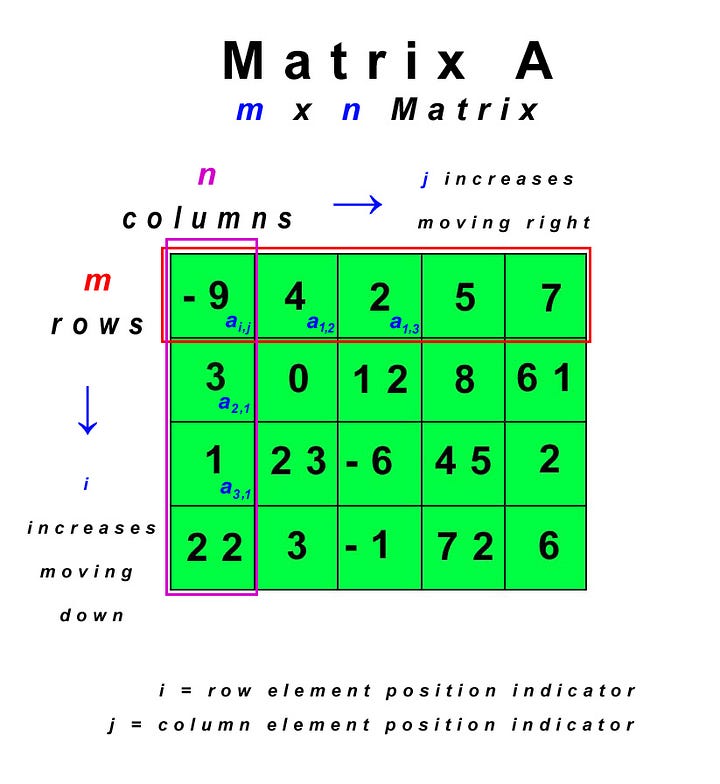

Remember that a 2D tensor is called a matrix. It’s basically a spreadsheet, with rows and columns. First, you need to know how to refer to different parts of the matrix. This graphic lays it all out for you:

To start we have a matrix A, which is denoted by a capital letter.

That matrix has m rows and n columns, so we say it is an m x n matrix, using small, italics letters.

Rows are horizontal, aka left to right. (Don’t be confused by the arrows, which point to i and j NOT the row’s direction. Again rows are horizontal!)

Columns are vertical, aka up and down.

In this case we have a 4 x 5 matrix (aka a 2D tensor) because we have 4 rows and 5 columns.

Each box is an element of the matrix. The position of those elements is indicated by a little italics a as well as a row indicator i and a column indicator j.

So the 4 in the top row, second column is indicated by $a_{1,2}$. The 3 in the second row, first column is $a_{2,1}$.
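Here is a small sketch of the same indexing idea in Python, with the matrix stored as a list of rows. Only the 4 and the 3 come from the text; every other number is made up, and the helper name element is hypothetical. Note that math notation counts from 1 while Python counts from 0.

```python
# A made-up 4 x 5 matrix stored as a list of rows.
# Only the 4 (row 1, column 2) and the 3 (row 2, column 1) come from the text.
A = [
    [7, 4, 1, 8, 2],
    [3, 9, 5, 0, 6],
    [2, 1, 7, 3, 9],
    [5, 6, 0, 4, 8],
]

m = len(A)     # number of rows
n = len(A[0])  # number of columns

def element(matrix, i, j):
    """Return the element at math-style (1-based) row i and column j."""
    return matrix[i - 1][j - 1]  # Python lists count from 0

print(m, n)              # 4 5
print(element(A, 1, 2))  # 4
print(element(A, 2, 1))  # 3
```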

We won’t have time to go over all the types of matrix math here, but let’s take a look at one type to get your feet wet.

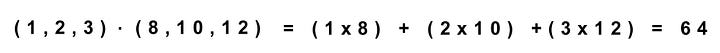

The dot product is a very common operation in neural networks so let’s see it in action.

Dot, Dot, Dot

The dot product is how we multiply one matrix by another matrix.

The dot product operation is symbolized by, you guessed it, a dot.

a · b

That’s the dot product of two scalars (aka single numbers), which are individual elements inside our matrix.

We multiply matching elements between matrices of the same size and shape and then sum up.

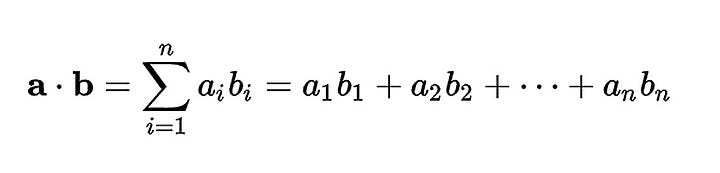

Want to see the formula for multiplying one vector by another?

Take a deep breath. You got this!

We know all these symbols now.

This is the formula for multiplying two equal length vectors. Remember from part 4 of Learning AI if You Suck at Math — Tensors Illustrated with Cats that a vector is a single row or column of numbers. Each row or column is an individual vector in our matrix.
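Written out with the sum symbol from earlier, the standard dot product of two vectors a and b of length n looks like this. The index letter i is a common choice and an assumption here, not necessarily the letter used in the original figure.

```latex
a \cdot b = \sum_{i=1}^{n} a_i b_i = a_1 b_1 + a_2 b_2 + \cdots + a_n b_n
```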

Basically we start at element one in matrix A and multiply it by element one in matrix B. Then we move on to element A2 multiplied by element B2. We do this for all the elements until we reach the end, “n”, and then sum them up (aka add them together).
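Those steps translate almost word for word into Python. This is a minimal sketch with plain lists; the function name dot and the example vectors are made up for illustration.

```python
def dot(a, b):
    """Dot product of two equal-length vectors (plain Python lists)."""
    if len(a) != len(b):
        raise ValueError("vectors must be the same length")
    # multiply matching elements, then add the results together
    return sum(a_i * b_i for a_i, b_i in zip(a, b))

# Made-up vectors for illustration
print(dot([1, 2, 3], [7, 9, 11]))  # 1*7 + 2*9 + 3*11 = 58
```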

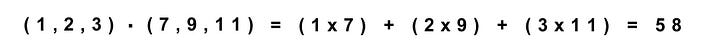

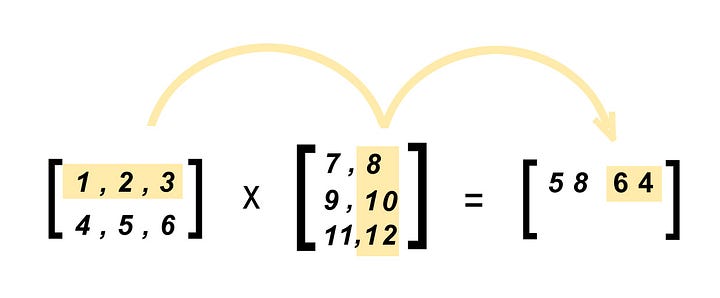

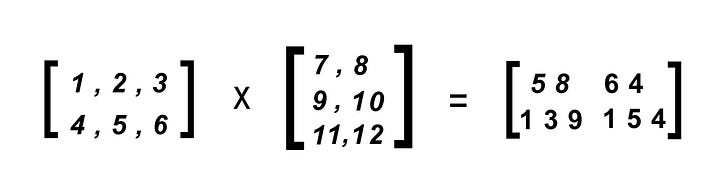

Let’s see a visual representation of that in action.

Now we can plug these numbers into our formula:

Here’s another example for the next number in the output matrix.

Here’s the final matrix after we’ve done all the math:

These examples come from the amazing Math is Fun website. That site has a ton of great examples. I haven’t found any place that does it better so far.

I added in the formulas to aid your understanding of reading formulas since they tend to skip over those so as not to confuse people. But you don’t have to be confused anymore.
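If you want to check this kind of math yourself, here is a rough Python sketch that builds every element of the output matrix as the dot product of a row from the first matrix and a column from the second. The function name matmul and the two small matrices are illustrative choices, not necessarily the ones pictured above.

```python
def matmul(A, B):
    """Multiply matrix A (m x n) by matrix B (n x p), both lists of rows."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must match rows of B")
    columns_of_B = list(zip(*B))  # turn B's columns into tuples
    # each output element is the dot product of a row of A with a column of B
    return [
        [sum(a * b for a, b in zip(row, col)) for col in columns_of_B]
        for row in A
    ]

# Illustrative matrices, not necessarily those in the original figures
A = [[1, 2, 3],
     [4, 5, 6]]
B = [[7, 8],
     [9, 10],
     [11, 12]]

print(matmul(A, B))  # [[58, 64], [139, 154]]
```

The top-left 58 is the first row of A dotted with the first column of B: 1×7 + 2×9 + 3×11.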

Winning Learning Strategies

I want to finish up with a few strategies to help you learn faster.

I’m an autodidact, which means I like to teach things to myself. I learn better when I have time to slow down and time to explore on my own. I make mistakes. My last article was a good example, as I had to correct a few bits.

But here’s the thing about mistakes: They’re a good thing!

They’re part of the process. There’s no getting around them, so just embrace them. If you’re making mistakes you’re learning. If you aren’t, you aren’t! Simple as that.

There’s an old joke in engineering.

If you want to get the right answer, don’t ask for help. Instead post the wrong answer and watch how many engineers jump in to correct you!

Engineers just can’t let wrong answers stand!

It’s an old trick but it works well.

It’s also important to note that you probably can’t read the Mathematical Notation book unless you’ve plugged your way through some of the other books I outlined in Learning AI if You Suck at Math — Part One or if you have a background in calculus, algebra and some geometry. You need a background for the terminology to make sense. However, I recommend getting the book anyway, because as you work through the other books, you can use it as a reference guide.

I also recommend taking a slow approach. There’s no race here! You get no points for half-assing it. If you skip over a term you don’t understand, you’ll only end up having to go back to it.

So STOP, take your time to look up EVERY symbol you don’t understand. It’s a slow and at times frustrating approach. But as you build up more and more knowledge it starts to go faster. You’ll find yourself understanding terms you never imagined you could ever understand.

Also, know that you’ll have to look things up from multiple locations. Let’s face it, most people are not good teachers. They may understand the material but that doesn’t mean they can make it accessible to others. Teaching is an art. That’s why the Math is Fun site is vastly superior to Wikipedia when you are starting out. Wikipedia is “correct” but often dry, hard to understand and sometimes confusing. As you learn more about this maybe you can even make Wikipedia better.

Keep all these things in mind and you can’t go wrong in your AI learning adventures!