credit: https://www.housetohouse.com/diamonds-in-the-rough/

In startup lingo, a “vanity metric” is a number that companies keep track of in order to convince the world — and sometimes themselves — that they’re doing better than they actually are.

To pick on a prominent example, about eight years ago Twitter announced that 200 million tweets per day were being sent on its app. That’s a big number, but it’s not as relevant as it might seem: a large fraction of these Tweets were sent by bots. Besides, what really matters to Twitter’s long-term viability as a company isn’t number of tweets sent per day; it’s the number of daily active users, and the number of ads you can show them before they leave the site.

Vanity metrics are everywhere, and they can really hold us back when we optimize for them, rather that optimizing for something that matters. They cause us to spin our wheels, and not understand why our hard work isn’t leading to results.

Now, I work at a data science mentorship startup. As part of my work, I review a lot of GitHub portfolios put together by aspiring data scientists. And it’s become hard to ignore one particular vanity metric that many people seem to be optimizing for at the cost of making actual progress: the number of data science projects in their portfolio. From what I’ve seen, a large fraction of aspiring data scientists get stuck in a spiral that involves building more and more data science projects with tools like sklearn and pandas, each featuring only incremental improvements over the last.

What I want to explore here is a way to break out of that spiral, by using some key lessons we’ve learned from seeing dozens of mentees leverage their projects into job interviews and ultimately, into offers.

So without further ado, here it is: a step-by-step guide to building a great data science project that will take you beyond the scikit-learn/pandas spiral, and get you interviews as fast as possible.

0. Key project design principles

Before we get into specifics, here are some general principles that we’ve found maximize the odds that a project will translate into an interview or offer:

- Your project should be original.

- Your project should prove that you have as many relevant skills as possible.

- Your project should make it clear that you can do more than crunch numbers.

- Your project should be easy to show off.

Now let’s look at how these four principles translate into concrete project features.

1. Where to get your data

Deciding on which dataset you want to work with is one of the more critical steps in designing your project.

As you do this, always remember Principle 1: your project should be original.

Technical leads and recruiters who parse resumes for a living see hundreds of CVs a day, each with 3 or 4 showcased projects. You don’t want your resume to be the 23rd they’ve seen today that features an analysis of the Titanic Survival Dataset or MNIST. As a rule, stay away from popular datasets, and stay even farther away from “training wheel” datasets like MNIST. As I’ve discussed before, citing these on your resume can hurt you more than it helps you.

Something else to keep in mind is Principle 2: your project should demonstrate as many relevant skills as possible.

Unless you work at a massive company, in which your work is highly specialized, the data you’ll work with in industry is probably going to be dirty. Very dirty. Poorly designed schemas, ints that should be floats, NaNvalues, missing entries, half-missing entries, absent column names, etc., etc. If you want to convince an employer that you have all the skills it takes to be productive on Day 1, using dirty data is a great way to go. For this reason, I generally suggest avoiding Kaggle datasets (which are often pre-cleaned).

So what’s left if you can’t use Kaggle either? Web scraping. And lots of it.

Use a library like beautifulsoup or scrapy, and scrape the data you need off the web. Either that or use a free API to build a custom dataset that no one else will have — and that you’ll have to clean and wrangle yourself.

Yes, it takes longer than importing the Iris dataset from scikit-learn. But that’s exactly why so few people do it — and why you’re more likely to get noticed if you do.

2. What to do before you use model.fit()

“Training wheel” datasets usually have one or two obvious applications.

For example, the Titanic Dataset seems to present a clear classification problem: it’s hard to look at it without thinking that the most obvious thing you could do is try to predict whether a given person will survive the disaster.

But industry problems aren’t always like that. Very often, you’ll have a bunch of (dirty) data, and you’ll need to figure out what to do with it to generate value for your company. That’s where Principle 3 comes in: an ideal project is one that demonstrates not only that you’re able to answer important data science questions, but that you’re capable of asking them, too.

In general, how do you come up with good questions to ask? A good start is to do data exploration.

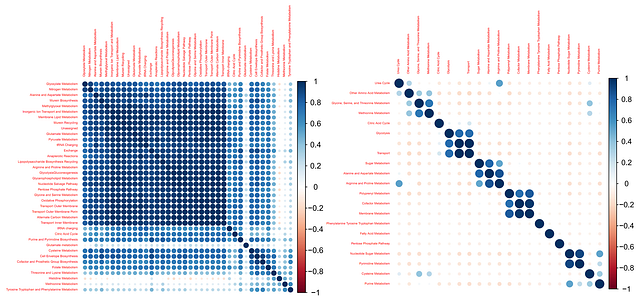

Scatter plots, correlation matrices, dimensionality reduction and visualization techniques should almost always be involved in this step. They help you to understand the data that you’ve collected so that you can think of good questions to ask as you move to the machine learning/prediction phase of your project, and have the added benefit of satisfying Principle 4, by providing you with plots that are easy to show off to interviewers and recruiters before and during your interviews.

It’s easy to downplay the importance of data exploration, but it really is one of the most mission-critical steps of any good data science project. Ideally, data exploration alone will result in one or two surprising insights—and those insights will make great fodder for discussion during your technical interviews.

3. The main result

credit: screenshot from https://www.youtube.com/watch?v=BxizdTrItTk

As you’re deciding what you want the main output of your project to be (i.e. what predictions you want to return, or what kinds of conclusions you want to be able to draw as your project’s main result), it’s important to keep Principle 4 in mind: whatever that output is, it’s best if it’s easy to show off, fun to play with, or both.

For this reason, I recommend exposing your project to the outside world as a web app (using Flask, or some other Python-based web dev framework). Ideally, you should be able to approach someone at a Meetup or during an interview, and have them try out a few input parameters, or play with a few knobs, and have some (ideally visually appealing) result returned to them.

Another advantage to deploying your final model as a web app is that it’ll force you to learn the basics of web development, if you don’t know them already (if you already do, then it will provide evidence to employers that that’s the case). Because most product-oriented companies use web dev at some point in their product, understanding server/client architectures and basic web dev can help you integrate your model into a production setting more easily if that becomes necessary.

4. The pitch

Once you’ve built your project, you’ll want to start pitching it to potential employers. And that pitch should involve more than just flashing your phone screen in front of someone and telling them to play with a few knobs.

To make your project pitch compelling, make sure you have a story to tell about what you’ve built. Ideally, that story should include one or two unexpected insights you gained during your data exploration or model evaluation phase (e.g. “it turns out this class is really hard to tell apart from this other class because [reasons]”).

This helps you out because it:

- Weaves a narrative around your project that’s easier (and more interesting) for interviewers to remember; and

- Makes it clear that you’re someone who makes a point of getting to the bottom of your data science problems.

Iterate

As you shop your project around to potential employers and show it off to other data scientists, get as much feedback as you can and iterate on your project to increase the impact that it makes.

And when you decide which improvements to make to your project as a result of that feedback, prioritize things that involve learning new skills, favoring those skills that are most in demand at the time. Develop your project fully, rather than spreading yourself out thinly, and you’ll eventually end up with a well-rounded skillset.

But most of all, you’ll have spared yourself the time sink of mindlessly optimizing for a vanity metric, like the total number of projects you’ve built, so you can focus on the only metric that counts: getting hired.