The 5-Step Recipe to Make Your Deep Learning Models Bug-Free

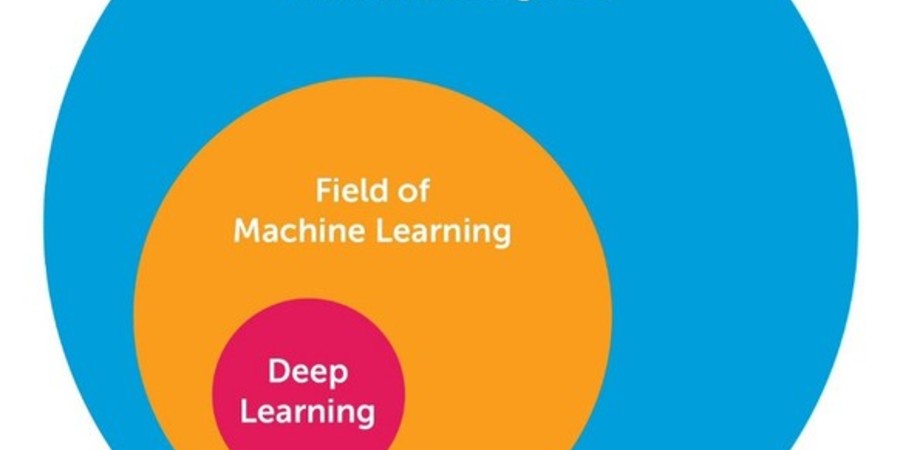

Troubleshooting and debugging deep learning models is hard. It’s difficult to tell if you have a bug because there are...